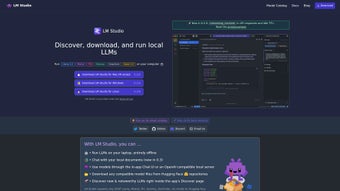

Explore Local LLMs with LM Studio

LM Studio is a desktop application for experimenting with local, open-source Large Language Models (LLMs). It lets users discover, download, and run ggml-compatible models from Hugging Face. A graphical interface handles model configuration and inference, which makes it usable at various skill levels. It is also cross-platform, so it runs on different operating systems.

LM Studio can use an available GPU to speed up model execution. Models run offline, so prompts and responses stay on the local machine and no internet connection is needed once a model has been downloaded. The app supports models such as Llama 2, Orca, and Vicuna, and includes a Chat UI for interacting with them directly. Compatible model files come from Hugging Face repositories, which keeps setup short.
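
For readers curious what fetching a model file from a Hugging Face repository involves, here is a minimal Python sketch. It is not LM Studio's own code. It relies on the public Hugging Face URL layout `https://huggingface.co/<repo>/resolve/<revision>/<file>`; the repository and file names are hypothetical placeholders, and the filename check is an assumed heuristic, not the rule LM Studio applies.

```python
from urllib.parse import quote

HF_BASE = "https://huggingface.co"


def model_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct-download URL for one file in a Hugging Face repo."""
    # quote() leaves "/" intact, so "org/model" stays a two-part path.
    return f"{HF_BASE}/{quote(repo_id)}/resolve/{quote(revision)}/{quote(filename)}"


def looks_ggml_compatible(filename: str) -> bool:
    """Assumed heuristic: ggml weights are .bin files with 'ggml' in the name."""
    name = filename.lower()
    return "ggml" in name and name.endswith(".bin")


# Hypothetical repository and file names, for illustration only.
repo = "example-org/example-model-GGML"
files = ["README.md", "pytorch_model.bin", "example-model.ggmlv3.q4_0.bin"]

for f in files:
    if looks_ggml_compatible(f):
        print(model_file_url(repo, f))
```

The resulting URL can be passed to any HTTP client to download the file, after which the model can be loaded without a network connection.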